Cross-linguistic patterns of speech prosodic differences in autism (A machine learning study)

Abstract

Differences in speech prosody are a widely observed feature of Autism Spectrum Disorder (ASD). However, it is unclear how prosodic differences in ASD manifest across different languages that demonstrate cross-linguistic variability in prosody.

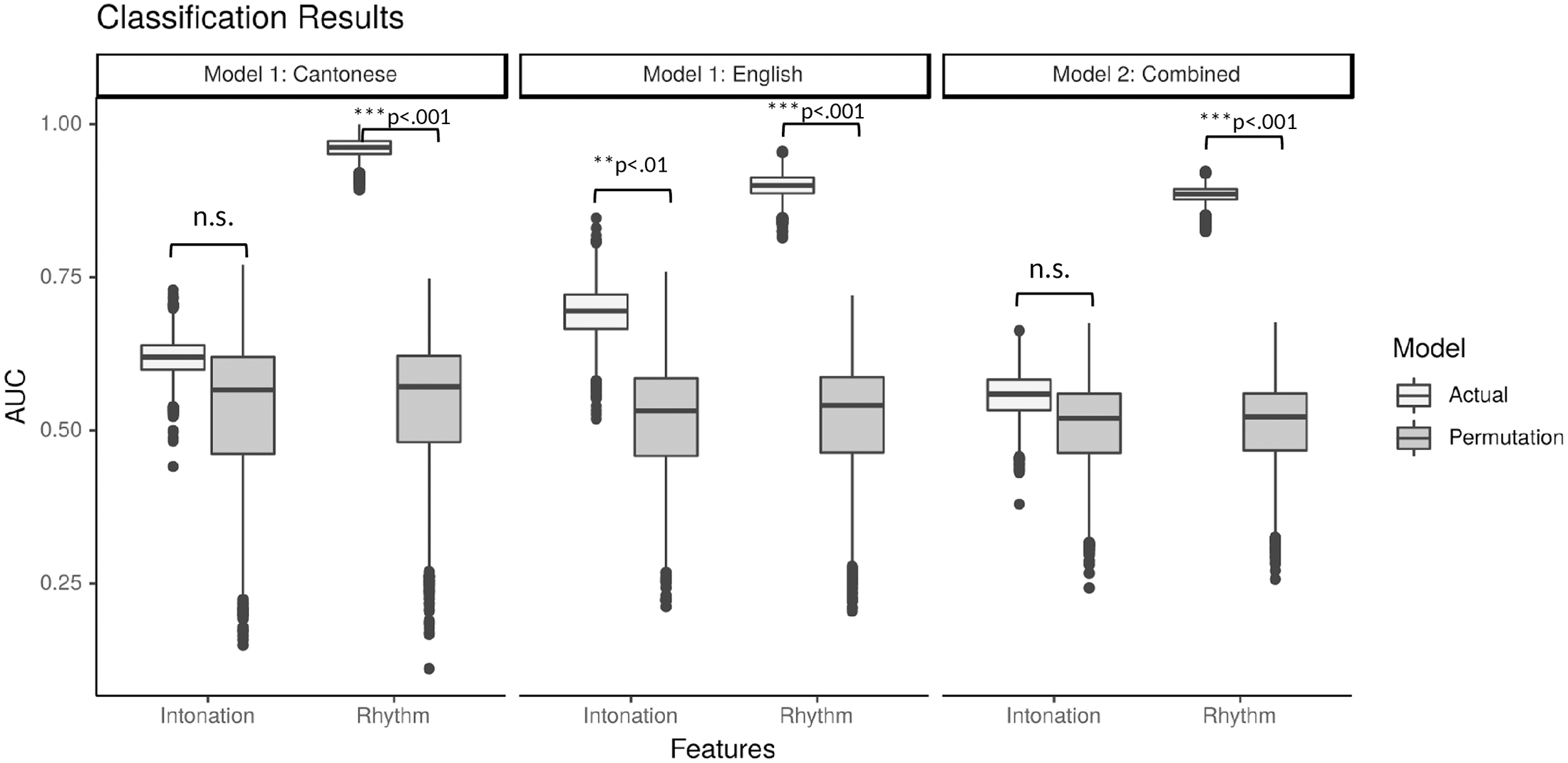

Using a supervised machine-learning analytic approach, we examined acoustic features relevant to rhythmic and intonational aspects of prosody derived from narrative samples elicited in English and Cantonese, two typologically and prosodically distinct languages.

Our models revealed successful classification of ASD diagnosis using rhythm-relevant features within and across both languages. Classification with intonation-relevant features was significant for English but not Cantonese. The results highlight rhythm as a key prosodic feature affected in ASD, and show that other prosodic properties, such as intonation, vary in ways that appear to be modulated by language-specific differences.

Acoustic feature extraction

Speech Rhythm:

- Defined as durational variations across syllables in speech, reflecting both linguistic and affective properties.

- Represented by the temporal envelope of the speech signal, which shows regularities in syllabic rhythm, especially in the 2–8 Hz range, a range that is key for neural processing of intelligible speech.

- Three rhythmic measures extracted:

  - Envelope Spectrum (ENV)

  - Intrinsic Mode Functions (IMF)

  - Temporal Modulation Spectrum (TMS)

- 8640 rhythm-relevant features were extracted from the participants’ utterances.
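
To make the idea of an envelope spectrum concrete, here is a minimal Python sketch. It is not the paper's extraction pipeline: the function name `envelope_spectrum`, the use of full-wave rectification as a crude envelope estimate, and the synthetic test signal are all assumptions made for illustration. It only shows how a slow (syllable-rate) modulation appears as a peak in the 2–8 Hz band of the envelope's spectrum.

```python
import numpy as np

def envelope_spectrum(signal, sample_rate, band=(2.0, 8.0)):
    """Return (freqs, magnitudes) of the amplitude-envelope spectrum inside `band` (Hz).

    Assumption: the envelope is approximated by full-wave rectification,
    a simplification chosen for this sketch, not the method of the paper.
    """
    envelope = np.abs(signal)                # crude temporal envelope
    envelope = envelope - envelope.mean()    # drop the DC component
    magnitudes = np.abs(np.fft.rfft(envelope)) / len(envelope)
    freqs = np.fft.rfftfreq(len(envelope), d=1.0 / sample_rate)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return freqs[in_band], magnitudes[in_band]

# Synthetic stand-in for speech: a 200 Hz carrier whose amplitude
# is modulated at 4 Hz (hypothetical values, two seconds at 1 kHz).
sample_rate = 1000
t = np.arange(2000) / sample_rate
signal = (1.0 + 0.8 * np.cos(2 * np.pi * 4.0 * t)) * np.sin(2 * np.pi * 200.0 * t)

freqs, magnitudes = envelope_spectrum(signal, sample_rate)
peak_hz = freqs[np.argmax(magnitudes)]
print(f"Strongest envelope modulation in the 2-8 Hz band: {peak_hz:.1f} Hz")
```

On real recordings, the in-band magnitudes (rather than just the peak) would be the kind of values that could serve as rhythm-relevant features.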

Intonation:

- Refers to the variation of voice pitch (fundamental frequency, f0) over time, crucial for prosody (speech melody and emotion).

- Instead of single summary values of pitch (mean or variance), entire multivariate f0 contours across utterances were analyzed to capture linguistic and affective prosody.

- 20 time-normalized f0 values were extracted per utterance, resulting in 400 intonation-relevant features per participant.
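
Time normalization of an f0 contour can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: the function names, the convention that unvoiced frames are marked as NaN, the use of linear interpolation, and the synthetic tracks are all hypothetical. The count of 20 utterances per participant is also an assumption, inferred only from 400 / 20 = 20.

```python
import numpy as np

def time_normalize_f0(f0_track, n_points=20):
    """Resample an f0 track (Hz) to `n_points` equally spaced values.

    Assumption: unvoiced frames are NaN and are simply dropped before
    linear interpolation, which is a simplification for this sketch.
    """
    f0 = np.asarray(f0_track, dtype=float)
    voiced = f0[~np.isnan(f0)]
    if voiced.size < 2:
        raise ValueError("need at least two voiced frames to interpolate")
    source_time = np.linspace(0.0, 1.0, voiced.size)   # original frames on a 0..1 axis
    target_time = np.linspace(0.0, 1.0, n_points)      # normalized time points
    return np.interp(target_time, source_time, voiced)

def participant_features(utterance_tracks, n_points=20):
    """Concatenate the normalized contours of all utterances into one vector."""
    return np.concatenate([time_normalize_f0(track, n_points) for track in utterance_tracks])

# Hypothetical data: 20 utterances of different lengths, each rising from 180 to 220 Hz.
tracks = [np.linspace(180.0, 220.0, 30 + i) for i in range(20)]
features = participant_features(tracks)
print(features.shape)
```

Because every utterance is mapped onto the same 20 normalized time points, contours of different durations become directly comparable and can be stacked into a fixed-length feature vector.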

source: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0269637

#ASD #autism #language #speech #machine-learning #neurodivergence