Perceptual Straightening Hypothesis of the brain

Natural video inputs constantly change in complex, nonlinear ways across time. The visual system, however, must make short-term predictions to guide behavior.

According to the temporal straightening hypothesis, the brain reformats these inputs into smoother, more predictable trajectories within neural representation space. This straightening minimizes curvature over time, effectively stabilizing perception and facilitating prediction of future frames.
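To make "curvature" concrete, here is a minimal sketch of one common way to measure it for a discrete sequence: the angle between successive displacement vectors, averaged over the sequence. The function name `mean_curvature` and the choice to report degrees are illustrative assumptions, not taken from the studies discussed here.

```python
import numpy as np

def mean_curvature(frames):
    """Mean angle (in degrees) between successive displacement vectors.

    `frames` is a sequence of T points (T >= 3); each point may be an image
    or a response vector and is flattened before use.
    """
    x = np.asarray(frames, dtype=float).reshape(len(frames), -1)
    v = np.diff(x, axis=0)                       # displacement between frames
    norms = np.linalg.norm(v, axis=1, keepdims=True)
    if np.any(norms == 0):
        raise ValueError("consecutive frames must differ")
    v_hat = v / norms                            # unit-length directions
    cos = np.sum(v_hat[:-1] * v_hat[1:], axis=1)
    angles = np.arccos(np.clip(cos, -1.0, 1.0))  # clip guards rounding error
    return float(np.degrees(angles.mean()))

# A straight path and a path with a single right-angle turn.
straight = [[0, 0], [1, 1], [2, 2], [3, 3]]
turn = [[0, 0], [1, 0], [1, 1]]
print(mean_curvature(straight), mean_curvature(turn))
```

Under this measure a perfectly straight trajectory has curvature near zero, and "straightening" means the value is lower in the representation space than in the input space.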

Experimental Evidence

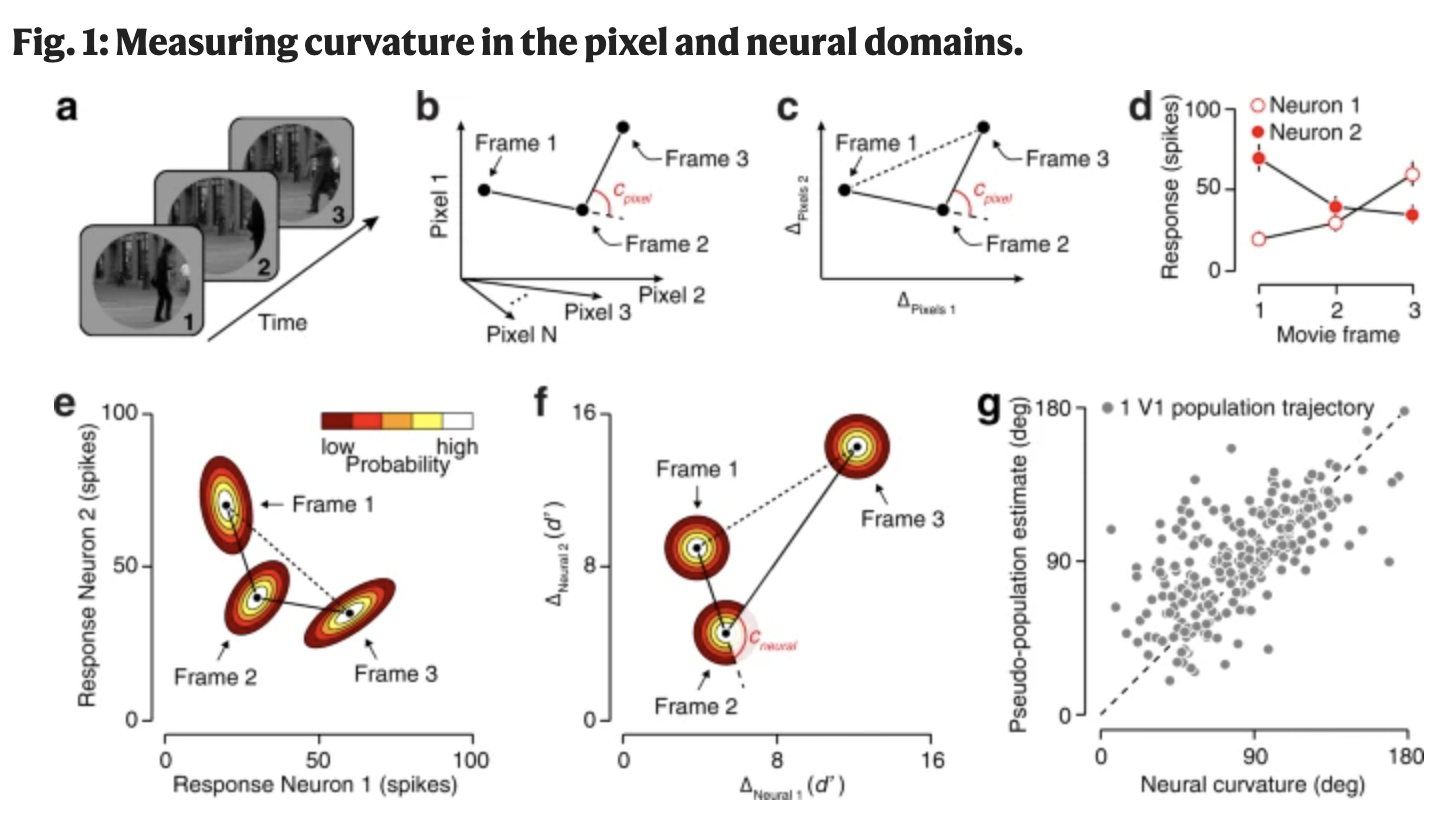

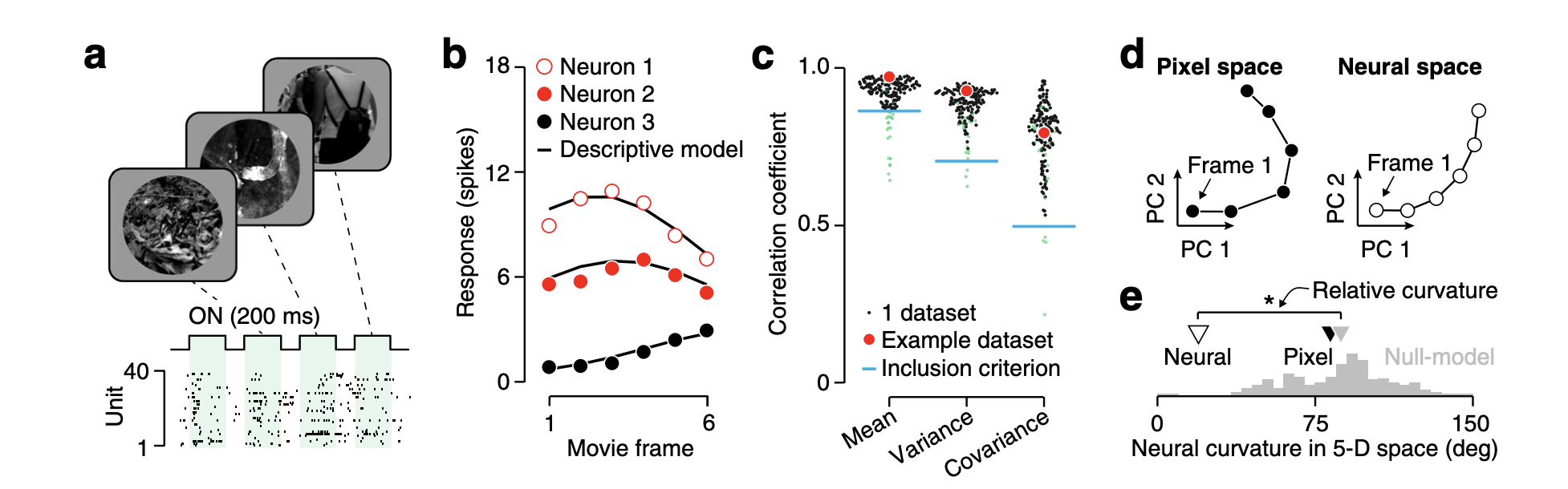

Hénaff and Simoncelli’s experiments quantified perceptual curvature by asking human subjects to judge visual trajectories derived from sequences of natural and synthetic images. They demonstrated three key results:

- Highly curved natural video sequences (in pixel intensity space) appear straighter perceptually.

- Artificial sequences that are straight in pixel space appear more curved perceptually.

- Naturalistic sequences that are physically straight remain relatively straight perceptually.

Population-level models of early visual processing, including center-surround filtering and nonlinear gain control, replicated these perceptual results, suggesting that temporal straightening emerges as a fundamental property of the early visual hierarchy.

Neural Mechanisms

A later study (Nature Communications, 2021) directly examined this phenomenon in the macaque primary visual cortex (V1). Using multi-electrode recordings, the researchers found that neural population activity transforms sequences of natural videos into trajectories with reduced curvature, confirming that temporal straightening occurs at the earliest cortical level. Synthetic or unnatural motion, by contrast, produced more tangled trajectories. The study identified nonlinear pooling and divisive normalization as crucial to these effects.
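As a rough illustration of how a normalization nonlinearity reshapes a trajectory, the sketch below applies a textbook form of divisive normalization (squared responses divided by pooled energy plus a constant) to a linear fade between two tiny patterns. Everything here is a toy assumption: the 3-pixel "images", the value of `sigma`, and the function names are hypothetical, and this is not the model fitted in the study. It illustrates only the artificial-sequence case, where a path that is straight in pixel space becomes curved after the nonlinearity.

```python
import numpy as np

def mean_curvature(x):
    """Mean angle in degrees between successive displacement vectors."""
    v = np.diff(np.asarray(x, dtype=float), axis=0)
    v_hat = v / np.linalg.norm(v, axis=1, keepdims=True)
    cos = np.sum(v_hat[:-1] * v_hat[1:], axis=1)
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)).mean()))

def divisive_normalization(x, sigma=0.1):
    """Square each response, then divide by pooled energy plus sigma^2."""
    energy = np.asarray(x, dtype=float) ** 2
    return energy / (sigma ** 2 + energy.sum(axis=-1, keepdims=True))

# Hypothetical "images": two 3-pixel patterns and a linear fade between them.
a = np.array([1.0, 0.0, 0.0])
b = np.array([0.0, 1.0, 0.0])
t = np.linspace(0.0, 1.0, 5)[:, None]
fade = (1.0 - t) * a + t * b          # straight line in pixel space

pixel_curvature = mean_curvature(fade)
model_curvature = mean_curvature(divisive_normalization(fade))
print(pixel_curvature, model_curvature)
```

The fade has essentially zero curvature in pixel space, while the normalized responses trace a slightly bent path. Showing the opposite effect on natural video would require real image sequences and a richer model, which is beyond this sketch.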

Computational and AI Relevance

Perceptual straightening connects closely to predictive coding and temporal stability theories of perception. It implies that the brain builds an internal geometry optimized for smooth dynamics, reflecting its learned model of the physical world. Recent computational studies note that even self-supervised vision transformers display analogous straightening, suggesting that AI systems can develop similar inductive biases toward "intuitive physics" without explicit supervision.

Summary Table

| Aspect | Description |
|---|---|
| Core hypothesis | Visual system straightens temporal trajectories of natural inputs |
| Key evidence | Human perceptual judgments and V1 neural recordings |
| Neural mechanism | Nonlinear pooling, divisive normalization, predictive coding |
| Function | Enhances temporal predictability and perceptual stability |
| AI analogues | Similar inductive bias observed in self-supervised vision models |